Let’s start off with what we mean when we say “computer”…

When we use the term “computer”, we don’t always mean physical computer hardware. We often use that term when referring to software, apps, kiosks, websites, operating systems, or social media. For the sake of this discussion, a “computer” is any hardware and software working together to address a user/customer need. Since we are all users or customers at some point, we can relate to the benefits these tools provide. In fact, we have been conditioned to believe that our digital tools are designed to “meet our needs”. While this may be true in part, it’s important to consider how software/hardware/apps are developed.

Let’s start with the obvious. First, these tools are created by for-profit businesses, and businesses have to make money. Second, most of these tools (at least the most popular ones) are offered to consumers at no cost. As we discussed last week, data and attention are the new currency. Therefore, companies must design these free digital tools to collect data, attention, or both from their users in order to be profitable. While services and apps like Facebook, Instagram, and WhatsApp offer valuable utility to the people who rely on them, they exist on our computers and phones to generate revenue for their creators.

Some of the debate around privacy has been obscured by how we view the digital technology we use. When people learn that a free email service or social media site is scanning the content of their email, or monitoring their behavior, some respond with:

“Hey – it’s not like it’s a person looking through my email or watching me. It’s a computer.”

The implication is that if a person were looking through our personal data, he or she would understand what we’ve done and would judge us. We might be embarrassed by what that person had seen. However, we view the “computer” as an impartial machine, looking for one thing and incapable of moral judgment. While computers may be single-minded, they are far from impartial. They will always represent the best interests of the people who wrote their instructions. And right now, the people writing the instructions that direct our digital tools are most keenly interested in collecting data about us. While the data they collect is primarily analyzed in aggregate, it can be drilled down to the individual level. Even if the data is “anonymized”, modern data analytics can reveal so much about our behavior and personality that our names are ultimately irrelevant.

Which matters more – the fact that Google knows where I was Friday night? Or that Google understands the behavioral triggers that motivate me to go to the places I go?

We do not need to be concerned about computers morally “judging” the things we choose to do, as a person might (“Michael is a bad person for going to a casino”). However, we do need to be aware that people ARE using computers to empirically judge our future behavior based on the data they collect (“Data correlations show with 85% certainty that people like Michael who go to casinos are more likely to get divorced, not vote, and default on bank loans.”).

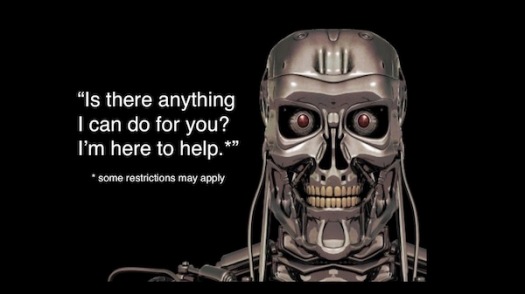

So whenever we come face to face with an app or use social media, we should reconsider what we are interacting with. Today, any computer that runs on software should be considered a service representative for the company that designed it: a representative that will ultimately act in the best interest of its creator. Be aware of what you do with it and how you use it.

To learn more about how Christians can better live with technology, check out my book, “God, Technology, & Us” – available now.

Got a different take? Share your thoughts in the comments!